Words by Fabio Morreale

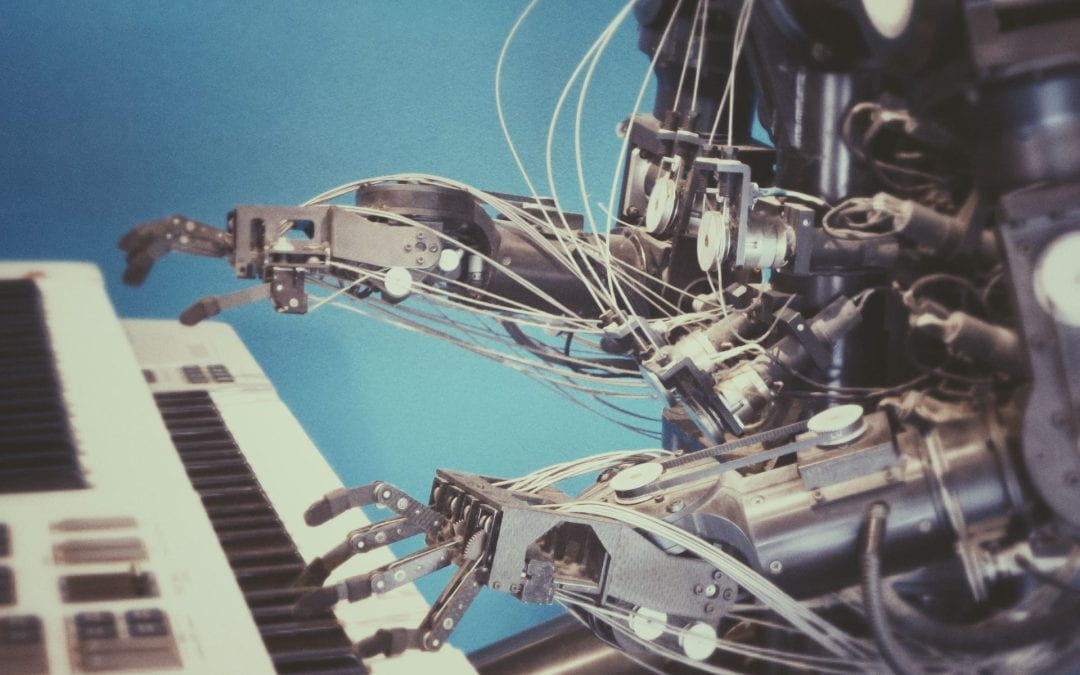

Feature picture by Possessed Photography on Unsplash

From the tool Google uses to gather training data for AI-driven applications, such as self-driving vehicles, to the way we interact with the internet, we are all training AI. Companies may label us all as ‘users’ or ‘customers’, yet that conceals what they’re really doing, says Fabio Morreale, a Senior Lecturer and Coordinator of Music Technology and Director of Research at the School of Music of the University of Auckland.

While debate and concern about the impact of Artificial Intelligence on our daily lives, work, and recreational activities are growing, one aspect of it has escaped most commentary. In order to do as humans do, AI systems have to learn from us. But who is teaching them? Pretty much all of us.

Every day, on multiple occasions, we all feed our humanness into proprietary AI systems by performing “tasks” on our phones or laptops. AI companies are very good at making us work for them without us knowing we’re doing so. Hundreds of small tasks we perform daily when browsing the internet or interacting with our mobile devices are exploited by AI companies to train their systems so they can learn to do human stuff.

My colleagues and I have dug deeper into these exploitative practices. The conclusion of our study, *The unwitting labourer: extracting humanness in AI training*, was clear: AI trainers (that is, all of us) are currently being exploited as unwaged labourers.

You may be aware that most AI applications are based on a technique called machine learning. With this technique, a machine can learn how to perform human tasks (e.g. play chess, create songs, paint) after being fed tons of application-specific data about the human ability it is being taught to emulate. This data is more complicated than what most of us think of as data. It includes information that is already out there, such as our age, gender, location, and purchasing habits. However, it also includes the results of myriad activities that only humans can do, and that companies sneakily oblige us to perform.


One of the most common of these data-producing tasks involves reCAPTCHA. It is that annoying online test that gets in the way when we are trying to log in to an online service or access a page and – ironically – asks us to prove we are not robots. You might be presented with an image of a road and asked to identify which parts of the picture have traffic lights in them.

This tool was originally designed to tell humans and bots apart, so as to keep bots out of online services, but since 2014 Google has been using it to gather training data for AI-driven applications, such as self-driving vehicles. (If you are anything like me and like to trick reCAPTCHA by purposely clicking on a fire hydrant when prompted to identify traffic lights, you might be responsible for future self-driving car accidents.)

Another notable example of activities exploited by AI companies is that of content recommendation systems, one of the most human-blood-thirsty villains in this story. Spotify, for instance, feeds human-produced data into its system to recommend new music to its users. When many users put the same two songs in the same playlist, Spotify’s AI learns that the songs are likely to have something in common and uses this information to curate playlists or suggest songs to its users.
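To illustrate the co-occurrence idea, here is a minimal sketch, assuming a toy list of user-made playlists. The song names and the functions `co_occurrence_counts` and `recommend` are hypothetical, and this is not Spotify's actual system; it only shows how counting which songs users place side by side can turn their curation work into a recommendation signal.

```python
from collections import Counter
from itertools import combinations


def co_occurrence_counts(playlists):
    """Count how many playlists contain each unordered pair of songs."""
    counts = Counter()
    for playlist in playlists:
        # Deduplicate and sort so each pair is counted once per playlist,
        # always in the same (a, b) order.
        for pair in combinations(sorted(set(playlist)), 2):
            counts[pair] += 1
    return counts


def recommend(song, counts, top_n=3):
    """Suggest the songs most often placed in the same playlist as `song`."""
    scores = Counter()
    for (a, b), n in counts.items():
        if a == song:
            scores[b] += n
        elif b == song:
            scores[a] += n
    return [other for other, _ in scores.most_common(top_n)]


# Hypothetical playlists: each one is a small act of unpaid curation.
playlists = [
    ["song_a", "song_b", "song_c"],
    ["song_a", "song_b"],
    ["song_b", "song_d"],
    ["song_a", "song_c", "song_b"],
]

counts = co_occurrence_counts(playlists)
print(counts[("song_a", "song_b")])  # 3
print(recommend("song_a", counts))   # ['song_b', 'song_c']
```

No one in this toy example was asked to label songs as similar; the similarity falls out of choices people made for their own listening.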

When developing a playlist of ‘happy songs’ for instance, playlist curators can look for songs that people tend to put on playlists called happy and identify the characteristics of what constitutes a ‘happy song’. If you are a music aficionado, even if you don’t use Spotify (good on you), chances are that the company exploits your music expertise and taste to improve its proprietary recommendation algorithm.


Spotify employs thousands of bots, or crawlers (they are as mean as they sound), to automatically read and analyse all sorts of music blogs and reviews to see how music is being described and what connections people make. In brief, recommendation systems get rich by recommending you stuff they learned about from someone somewhere else, and they can legally extract humanness from this someone, without asking their permission to do so.

By now, you will, of course, have heard of AI systems that generate text, music, visual art and other forms of output from a few textual prompts. ChatGPT has grabbed the headlines this year, but there are numerous systems producing human-like content. Once again, all of this can happen because we – all of us – unwittingly train them to do as humans do.

The training data for these systems comes from datasets of images publicly available on the internet, such as ImageNet, which are “scraped” (automatically identified and downloaded) from websites such as Flickr, YouTube and Instagram. These images include photographs, drawings and paintings uploaded to the internet by humans (professionals or otherwise).

Even the way we filter the spam in our inbox is a form of AI-training data. With millions of users receiving billions of emails daily, a significant corpus of labelled data is available to train spam filters, which classify emails as spam or not spam. This is a form of collaborative filtering similar to a recommendation engine, although it is used to remove content rather than promote it.
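To make the spam example concrete, here is a minimal sketch, assuming a tiny hypothetical set of emails that users have already marked as spam or not spam ("ham"). It uses multinomial naive Bayes, a standard textbook technique chosen here for illustration and not necessarily what any email provider actually uses; the functions `train` and `classify` are hypothetical. The point is that every label the classifier learns from was supplied by a person.

```python
import math
from collections import Counter


def train(labelled_emails):
    """Learn per-class word counts from (text, label) pairs labelled by users."""
    word_counts = {"spam": Counter(), "ham": Counter()}
    class_counts = Counter()
    for text, label in labelled_emails:
        class_counts[label] += 1
        word_counts[label].update(text.lower().split())
    vocab = set(word_counts["spam"]) | set(word_counts["ham"])
    return word_counts, class_counts, vocab


def classify(text, model):
    """Return 'spam' or 'ham' using naive Bayes with add-one smoothing.

    Assumes the training data contains at least one example of each class.
    """
    word_counts, class_counts, vocab = model
    total_emails = sum(class_counts.values())
    best_label, best_score = None, -math.inf
    for label in ("spam", "ham"):
        # Log prior: how common this class is among the labelled emails.
        score = math.log(class_counts[label] / total_emails)
        total_words = sum(word_counts[label].values())
        for word in text.lower().split():
            # Add-one smoothing so unseen words do not zero out the probability.
            score += math.log((word_counts[label][word] + 1) / (total_words + len(vocab)))
        if score > best_score:
            best_label, best_score = label, score
    return best_label


# Hypothetical emails, each labelled by a user clicking "spam" or leaving it alone.
labelled = [
    ("win a free prize now", "spam"),
    ("claim your free money", "spam"),
    ("meeting moved to friday", "ham"),
    ("lunch on friday with the team", "ham"),
]

model = train(labelled)
print(classify("free prize money", model))        # spam
print(classify("team meeting on friday", model))  # ham
```

In this sketch, the filter knows nothing about spam except what the labellers taught it.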

The ethical problem with all of these systems is that individuals interacting with them are mostly unaware of these data capture practices and the value they’re adding, and that they are uncompensated despite the massive profit the companies make out of us. Our argument is that providing free labour that creates surplus value for a private company, without consent or compensation, is a form of labour exploitation.

Companies may label us all as “users” or “customers”, yet that is a way of concealing the labour exploitation that is underway. The power imbalance between unwitting labourers and technology companies is immense. Our societies have allowed a business model founded on exploitation to proliferate, and it should be in the interests of governments to redress this imbalance of power.